I’m a fifth-year Ph.D. candidate in the Department of Computer Science and Engineering at Washington University in St. Louis (WashU). I’m fortunate to be supervised by Dr. Yixin Chen, and I also work closely with Dr. Fuhai Li. My research spans Graph Neural Networks (GNNs), large language models (LLMs), and their synergies. Specifically, my research includes:

- Understanding and improving the expressiveness and structure-learning capabilities of GNNs.

- Integrating GNNs with LLMs to design graph foundation models (GFMs). I focus on designing the architecture, training tasks, and prompting mechanisms to enable cross-domain zero-shot learning on graph tasks.

- Designing test-time training techniques to strengthen the reasoning capabilities of LLMs on graphs.

- Enhancing the expressiveness of Mixture-of-Experts (MoE) models by introducing structural relationships into the experts.

In addition, I am interested in applying graph learning and LLMs to domains such as recommendation, precision medicine, planning, and reasoning.

I am actively looking for a full-time research scientist position starting in summer 2026. Feel free to chat or shoot me an email to discuss any potential opportunities.

🔥 News

- 2025.07: GRIP is accepted by PUT at ICML 2025!

- 2025.04: Passed the Ph.D. proposal!

- 2025.01: GOFA is accepted by ICLR 2025!

- 2024.09: GNN4TaskPlan is accepted by NeurIPS 2024! Congratulations to Xixi and Yifei!

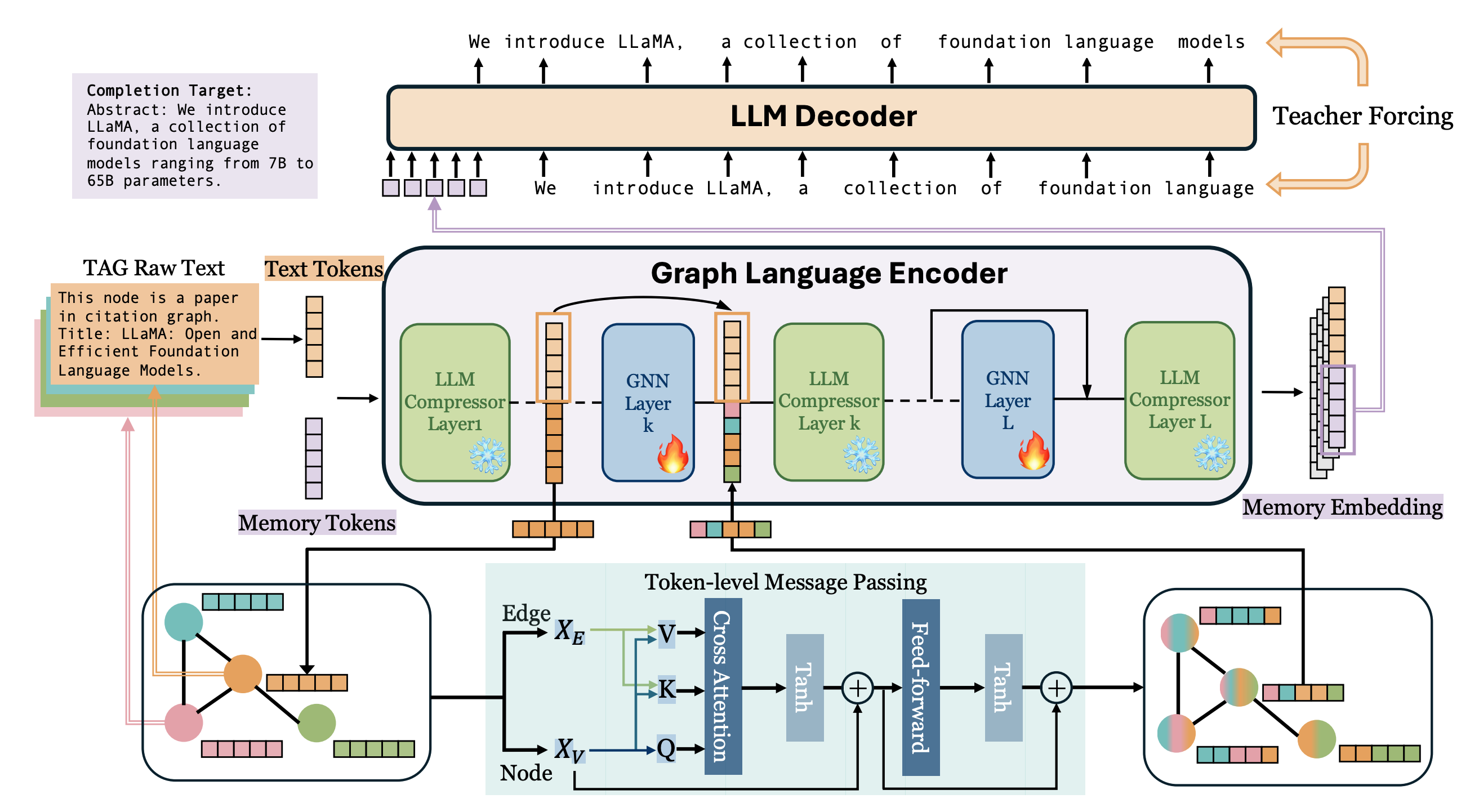

- 2024.08: Check out our newest work on joint modeling of graphs and language (paper, code). In this work, we propose GOFA, which interleaves GNN layers into an LLM to give the LLM the ability to reason over graphs. We also design multiple novel large-scale unsupervised pretraining tasks for GOFA. GOFA achieves SOTA results across multiple benchmark datasets!

- 2024.06: We release TAGLAS, an atlas of text-attributed graph datasets. We provide easy-to-use APIs for loading datasets, tasks, and evaluation metrics. The technical report is available on arXiv. The project is still in development, and any suggestions are welcome.

- 2024.04: 🎉🎉 PathFinder is accepted by Frontiers in Cellular Neuroscience!

- 2024.01: 🎉🎉 COLA is accepted by WWW 2024! Congratulations to Hao!

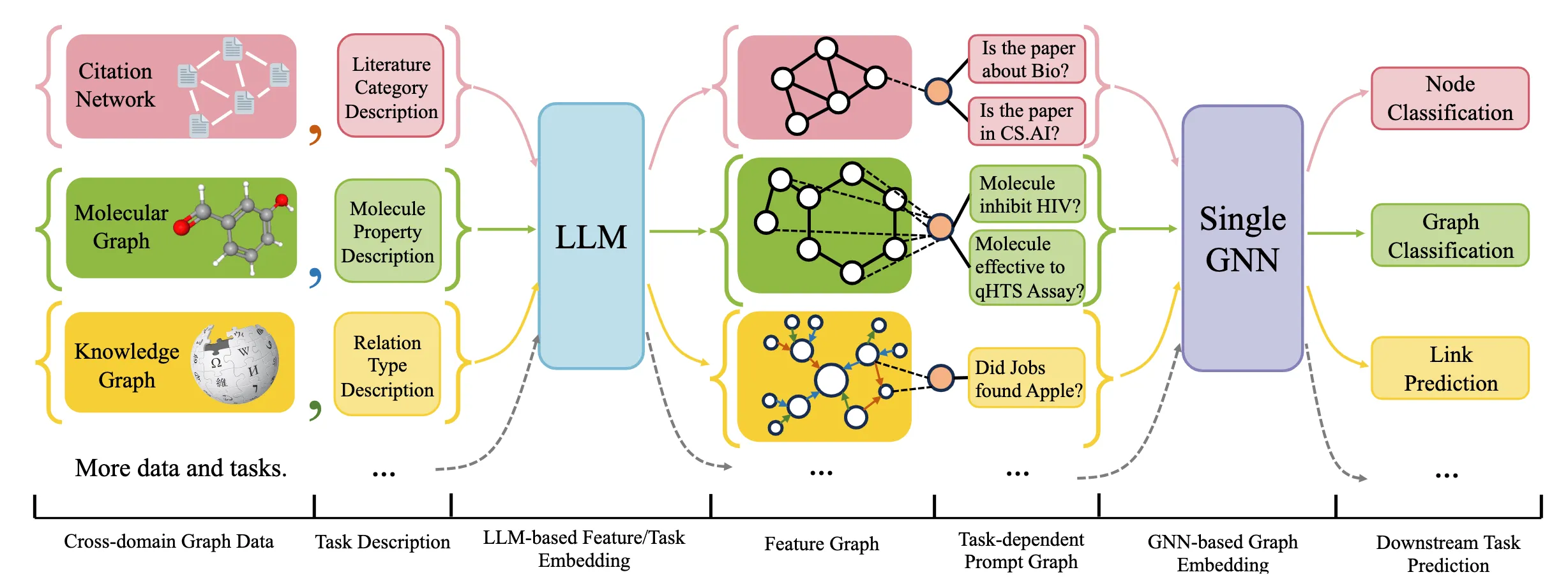

- 2024.01: 🎉🎉 OFA is accepted by ICLR 2024 as a Spotlight (5%)!

- 2024.01: 🎉🎉 sc2MeNetDrug is accepted by PLOS Computational Biology!

- 2023.10: We have developed sc2MeNetDrug, a novel R Shiny application for the analysis of single-cell RNA-seq data. This application enables the identification of activated pathways, up-regulated ligands and receptors, cell-cell communication networks, and potential drugs to inhibit dysfunctional networks. Moreover, it provides a user-friendly UI for easy use! Check out our GitHub repository and website for more details. This project is still ongoing, and we welcome any comments or suggestions!

- 2023.10: Leveraging the power of language and LLMs, we propose One-for-All (OFA), the first general framework that can use a single graph model to address (almost) all graph classification tasks across different domains. Check out our preprint and code!

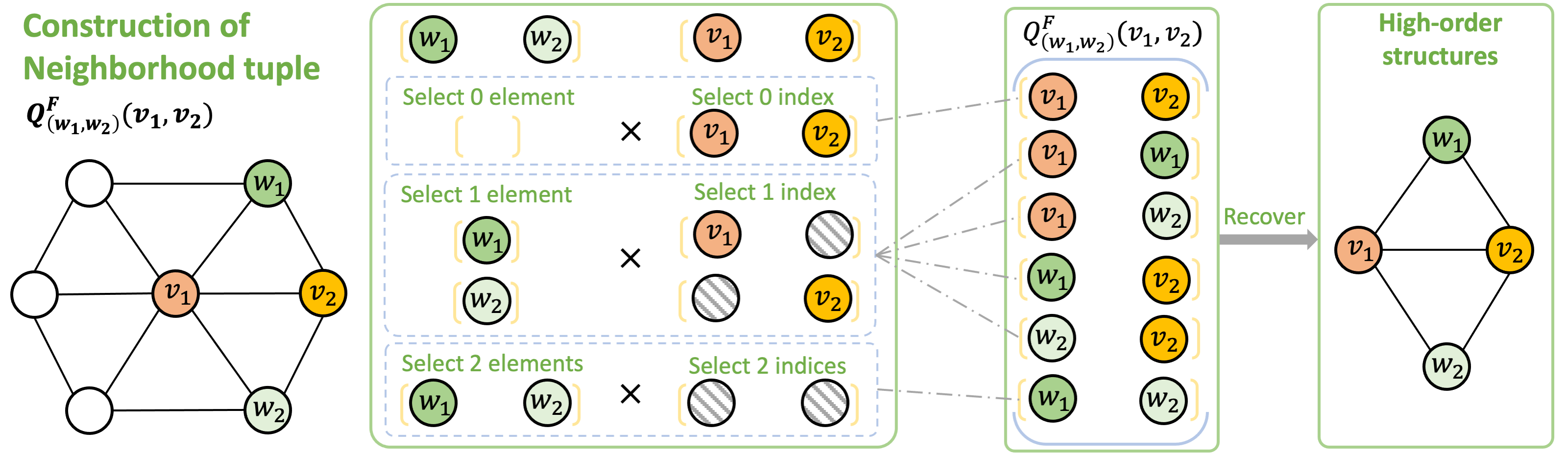

- 2023.09: 🎉🎉 (k,t)-FWL+, MAG-GNN, and d-DRFWL2 (spotlight) are accepted by NeurIPS 2023!

- 2023.06: 🎉🎉 Passed the oral exam!

- 2022.09: 🎉🎉 Our paper “How powerful are K-hop message passing graph neural networks” is accepted by NeurIPS 2022. See you in New Orleans!

- 2022.08: 🎉🎉 Our paper “Reward delay attacks on deep reinforcement learning” is accepted by GameSec 2022.

📝 Selected Publications

GOFA: A Generative One-For-All Model for Joint Graph Language Modeling

Lecheng Kong*, Jiarui Feng*, Hao Liu*, Chengsong Huang, Jiaxin Huang, Yixin Chen, Muhan Zhang (* Equal contribution)

One for All: Towards Training One Graph Model for All Classification Tasks

Hao Liu*, Jiarui Feng*, Lecheng Kong*, Ningyue Liang, Dacheng Tao, Yixin Chen, Muhan Zhang (* Equal contribution)

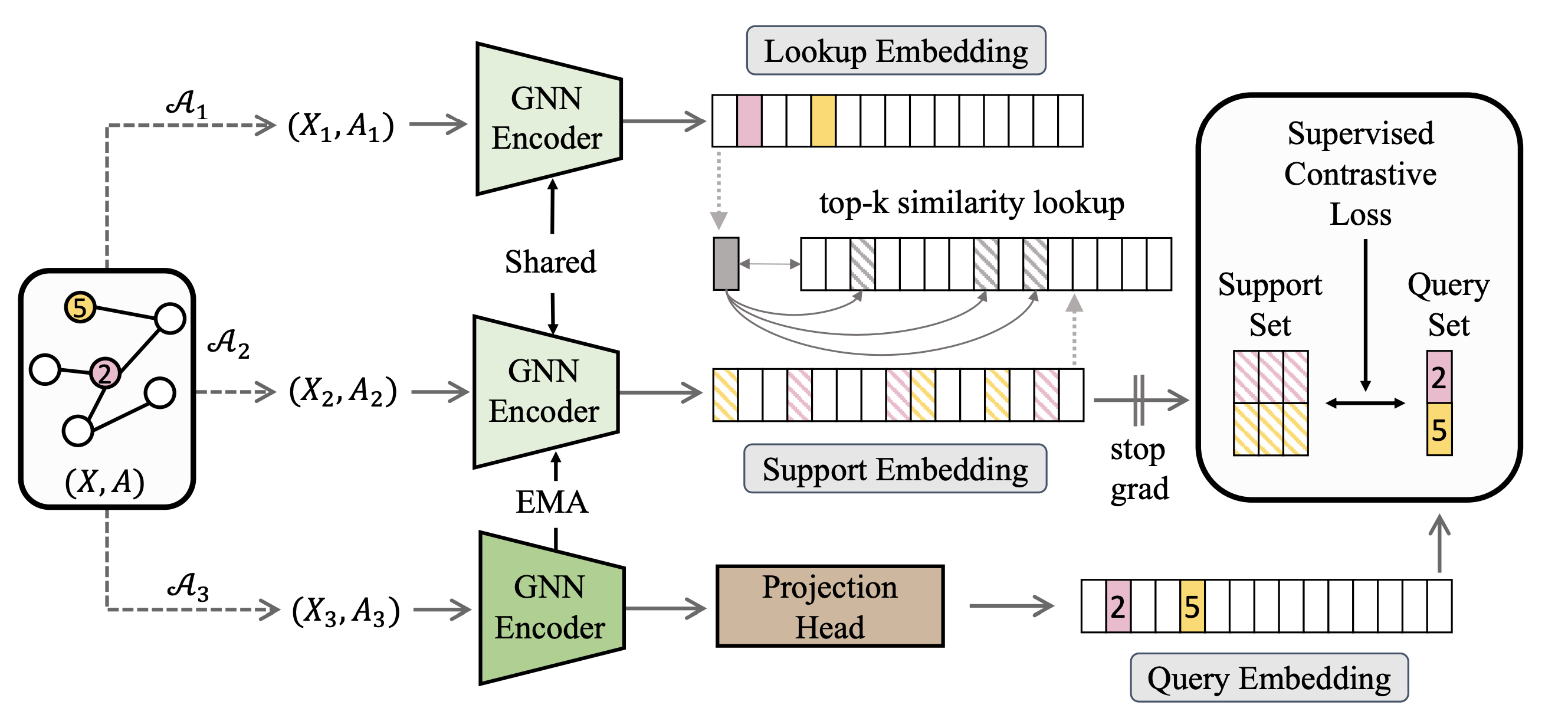

Graph Contrastive Learning Meets Graph Meta Learning: A Unified Method for Few-shot Node Tasks

Hao Liu, Jiarui Feng, Lecheng Kong, Dacheng Tao, Yixin Chen, Muhan Zhang

Extending the Design Space of Graph Neural Networks by Rethinking Folklore Weisfeiler-Lehman

Jiarui Feng, Lecheng Kong, Hao Liu, Dacheng Tao, Fuhai Li, Muhan Zhang, Yixin Chen

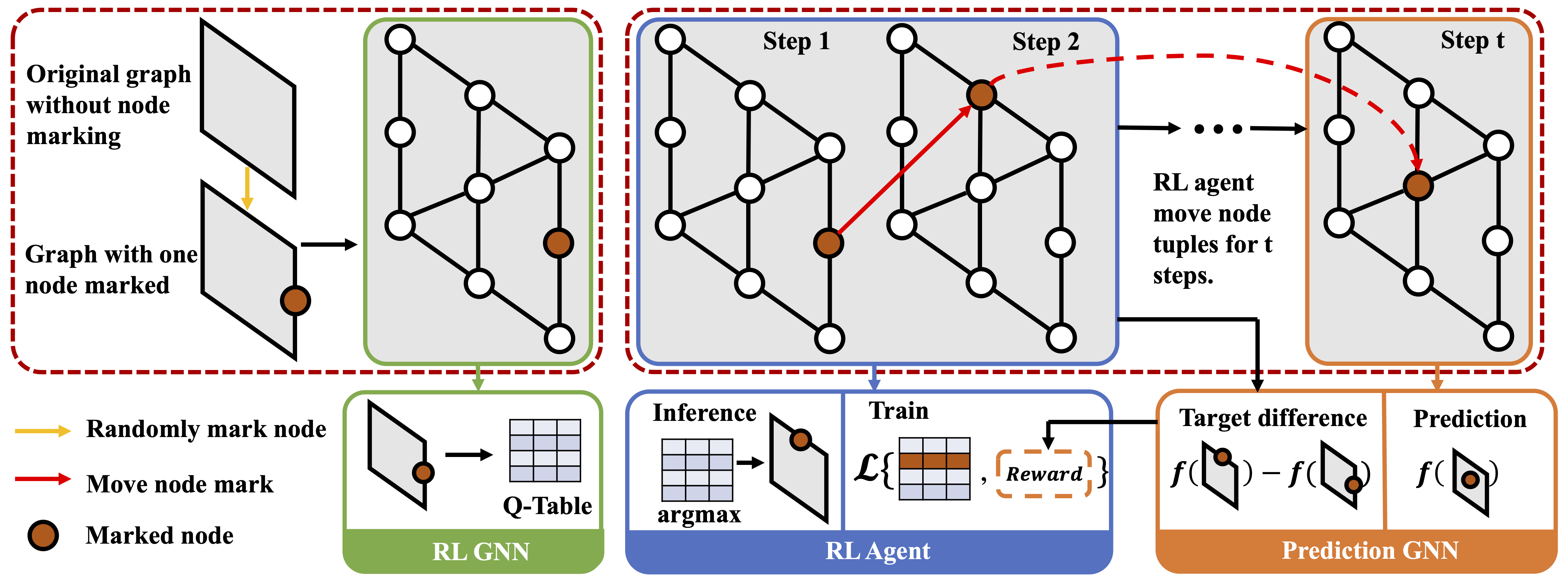

MAG-GNN: Reinforcement Learning Boosted Graph Neural Network

Lecheng Kong, Jiarui Feng, Hao Liu, Dacheng Tao, Yixin Chen, Muhan Zhang

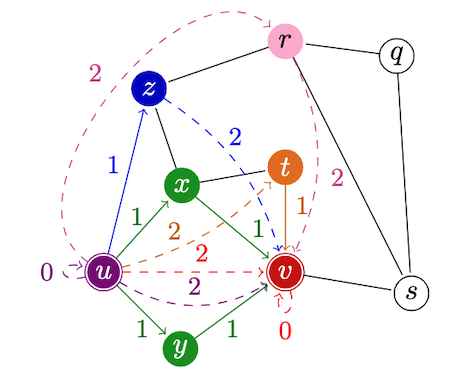

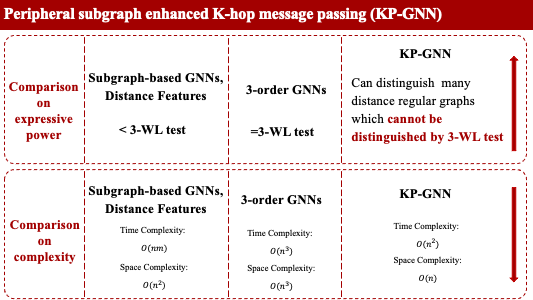

How powerful are K-hop message passing graph neural networks

Jiarui Feng, Yixin Chen, Fuhai Li, Anindya Sarkar, Muhan Zhang

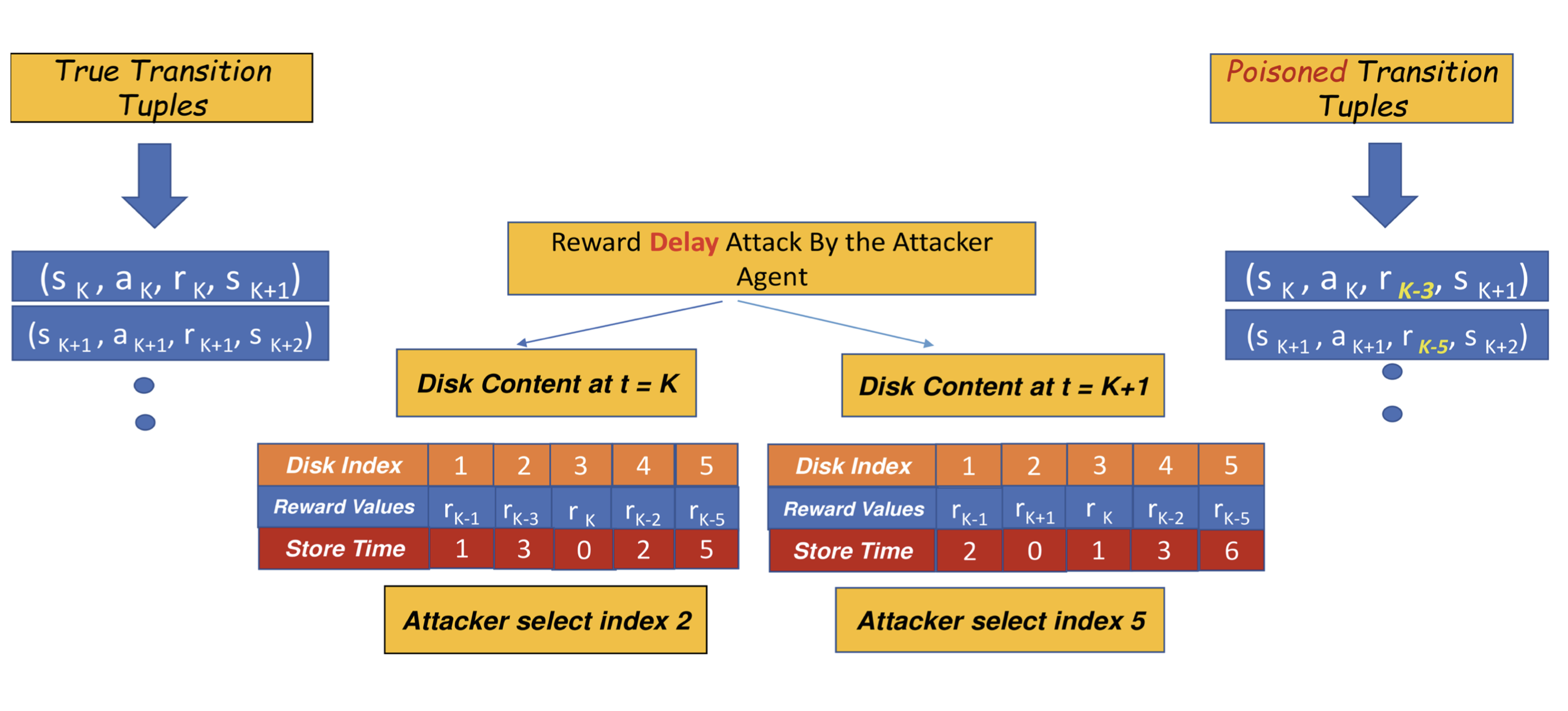

Reward Delay Attacks on Deep Reinforcement Learning

Anindya Sarkar, Jiarui Feng, Yevgeniy Vorobeychik, Christopher Gill, Ning Zhang

You can browse my full publication list on Google Scholar.

🎖 Honors and Awards

- 2023.10 NeurIPS 2023 Travel Award.

- 2021.07 ICIBM 2021 Travel Award.

📖 Education

- 2021.09 - Present, Ph.D. student, Washington University in St. Louis, MO, USA.

- 2019.09 - 2021.05, Master's, Washington University in St. Louis, MO, USA.

- 2015.09 - 2019.06, Bachelor's, South China University of Technology, Guangzhou, China.

💻 Internships

- 2025.09 - Present, Student Researcher, Meta, Remote, US.

- 2025.05 - 2025.08, Research intern, Meta, Menlo Park, US.

- 2024.06 - 2024.09, Lab research intern, Pinterest, Remote, US.

- 2019.06 - 2019.08, SWE intern, Alibaba Cloud, Hangzhou, China.

- 2018.12 - 2019.02, Data analytics intern, Credit card center, Guangzhou Bank, Guangzhou, China.

🔬 Services

- Conference reviewer: CVPR23; NeurIPS23; ICLR24; CVPR24; NeurIPS24; ICLR25; ICML25; CVPR25; NeurIPS25; ICLR26.

🎮 Misc

- Crazy computer gamer: Overwatch, APEX, World of Warcraft, PUBG, CS:GO…

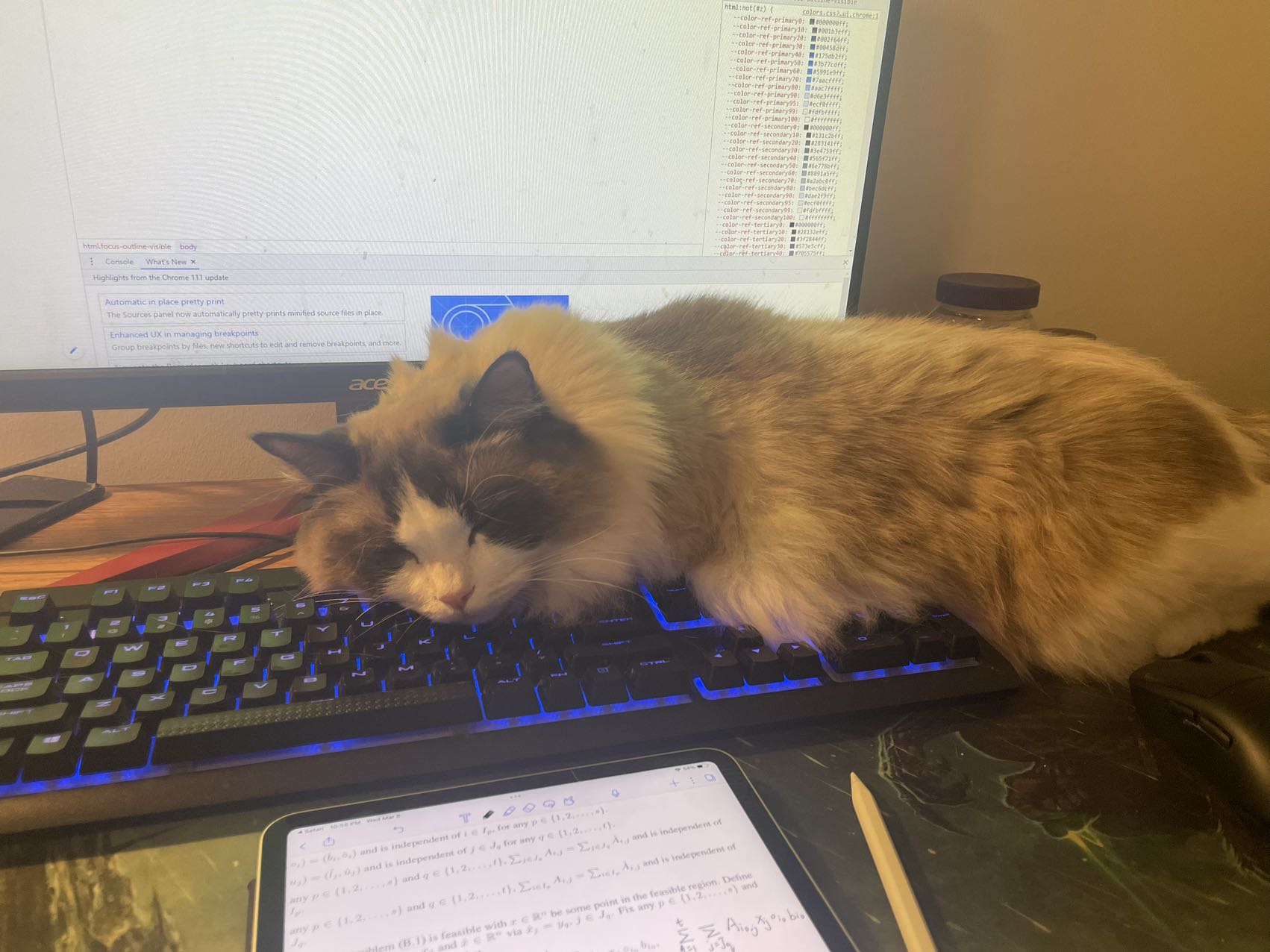

- I have a cute Ragdoll cat named $\theta$, and I love him!!!!!!!!!!!!!